Cleaning up a baby peacock sullied by a non-information spill

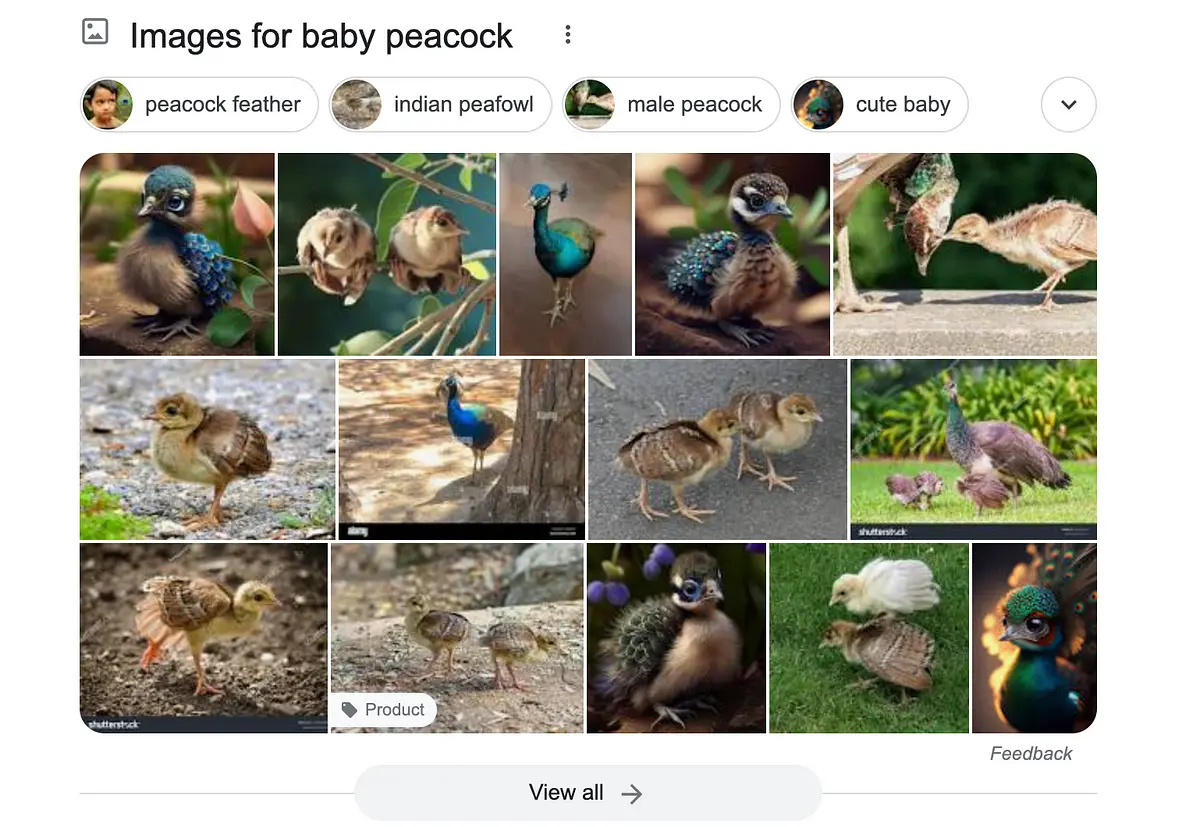

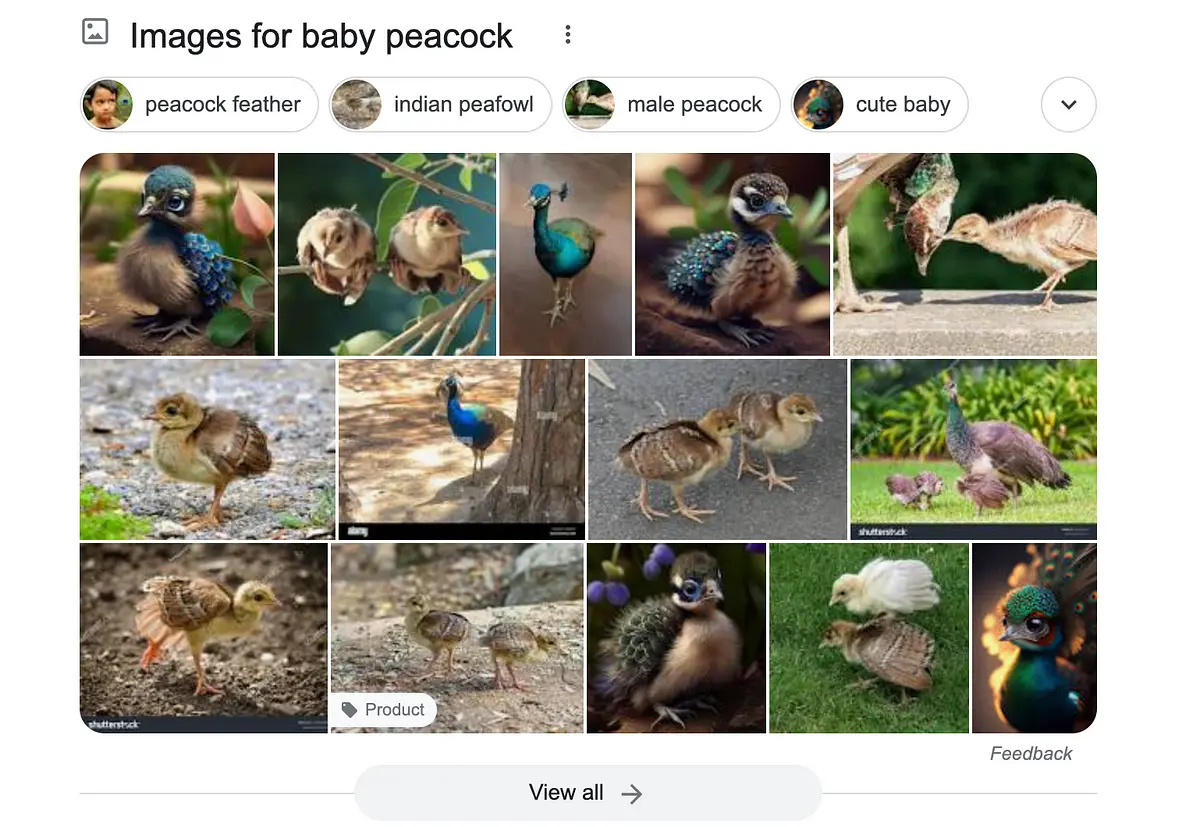

Old article, but still relevant. If you search on Google, you will still see some of the same AI art.

Synthetic media should be required to be watermarked at the source

Bit late for that (even in 2023). The best we could do now is something like public-key cryptography, where each camera holds a secret key and signs the images it captures. However:

For artists and photographers with old school cameras (“old school” meaning “doesn’t compute and sign a perceptual hash of the image”), something similar could still be done. Each such person can generate a public/private key pair for themselves and manually sign the images they’ve created. This depends on you trusting that specific artist, though, as opposed to trusting the manufacturer of the camera used.
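To make the "sign it yourself" idea concrete, here is a rough sketch of what an artist's workflow could look like. It is only an illustration under a few assumptions of mine, not anything the article or a camera vendor specifies: it uses Ed25519 (written out from the RFC 8032 description so it runs on the Python standard library alone), it signs a plain SHA-256 digest of the file bytes rather than a perceptual hash, and the names `public_key`, `sign`, `verify` and `image_bytes` are made up for the example. The arithmetic is not constant-time, so a real tool should use a vetted crypto library instead.

```python
import hashlib
import secrets

# Ed25519 following RFC 8032, written for readability.
# NOT constant-time and not audited: illustration only.
P = 2**255 - 19                                       # field prime
Q = 2**252 + 27742317777372353535851937790883648493   # group order
D = -121665 * pow(121666, P - 2, P) % P               # curve constant d
SQRT_M1 = pow(2, (P - 1) // 4, P)                     # square root of -1 mod P

def _add(a, b):
    """Add two curve points in extended coordinates (X, Y, Z, T)."""
    A = (a[1] - a[0]) * (b[1] - b[0]) % P
    B = (a[1] + a[0]) * (b[1] + b[0]) % P
    C = 2 * a[3] * b[3] * D % P
    Z2 = 2 * a[2] * b[2] % P
    E, F, G, H = B - A, Z2 - C, Z2 + C, B + A
    return (E * F % P, G * H % P, F * G % P, E * H % P)

def _mul(s, pt):
    """Scalar multiplication by double-and-add."""
    acc = (0, 1, 1, 0)  # neutral element
    while s > 0:
        if s & 1:
            acc = _add(acc, pt)
        pt = _add(pt, pt)
        s >>= 1
    return acc

def _recover_x(y, sign_bit):
    """Solve the curve equation for x given y and the parity bit of x."""
    if y >= P:
        return None
    x2 = (y * y - 1) * pow(D * y * y + 1, P - 2, P) % P
    if x2 == 0:
        return None if sign_bit else 0
    x = pow(x2, (P + 3) // 8, P)
    if (x * x - x2) % P != 0:
        x = x * SQRT_M1 % P
    if (x * x - x2) % P != 0:
        return None
    return P - x if (x & 1) != sign_bit else x

def _compress(pt):
    zinv = pow(pt[2], P - 2, P)
    x, y = pt[0] * zinv % P, pt[1] * zinv % P
    return (y | ((x & 1) << 255)).to_bytes(32, "little")

def _decompress(b):
    y = int.from_bytes(b, "little")
    sign_bit, y = y >> 255, y & ((1 << 255) - 1)
    x = _recover_x(y, sign_bit)
    return None if x is None else (x, y, 1, x * y % P)

BASE = _decompress((4 * pow(5, P - 2, P) % P).to_bytes(32, "little"))

def _h_int(*parts):
    return int.from_bytes(hashlib.sha512(b"".join(parts)).digest(), "little")

def _expand(secret):
    h = hashlib.sha512(secret).digest()
    a = int.from_bytes(h[:32], "little")
    return (a & ((1 << 254) - 8)) | (1 << 254), h[32:]

def public_key(secret):
    a, _ = _expand(secret)
    return _compress(_mul(a, BASE))

def sign(secret, msg):
    a, prefix = _expand(secret)
    pub = _compress(_mul(a, BASE))
    r = _h_int(prefix, msg) % Q
    r_bytes = _compress(_mul(r, BASE))
    h = _h_int(r_bytes, pub, msg) % Q
    return r_bytes + ((r + h * a) % Q).to_bytes(32, "little")

def verify(pub, msg, sig):
    if len(pub) != 32 or len(sig) != 64:
        return False
    A, R = _decompress(pub), _decompress(sig[:32])
    s = int.from_bytes(sig[32:], "little")
    if A is None or R is None or s >= Q:
        return False
    h = _h_int(sig[:32], pub, msg) % Q
    lhs, rhs = _mul(s, BASE), _add(R, _mul(h, A))
    # Projective comparison: x1/z1 == x2/z2 and y1/z1 == y2/z2
    return ((lhs[0] * rhs[2] - rhs[0] * lhs[2]) % P == 0
            and (lhs[1] * rhs[2] - rhs[1] * lhs[2]) % P == 0)

# The artist generates a key pair once and publishes `pub` somewhere trusted.
secret = secrets.token_bytes(32)
pub = public_key(secret)

# Placeholder bytes standing in for the contents of an image file.
image_bytes = b"placeholder bytes standing in for an image file"
digest = hashlib.sha256(image_bytes).digest()
sig = sign(secret, digest)

# Anyone holding `pub` can check the published image against the signature.
print(verify(pub, digest, sig))
```

One caveat with this stand-in: because it signs an exact digest of the file, any re-encode or resize breaks the signature. That is presumably why the camera case above talks about a perceptual hash instead. And as noted, a valid signature only tells you the key holder vouched for the image, so it is only as good as your trust in that artist.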