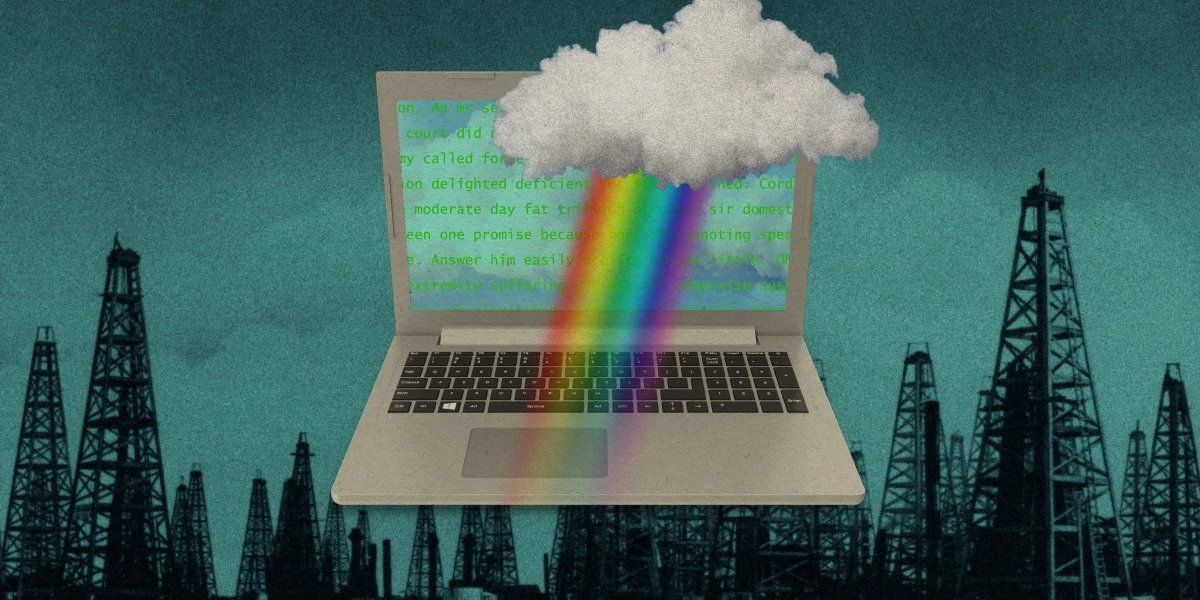

Making an image with generative AI uses as much energy as charging your phone

www.technologyreview.com
