KT may have had an entire team dedicated to infecting its own customers

A South Korean media outlet has alleged that local telco KT deliberately infected some customers with malware due to their excessive use of peer-to-peer (P2P) downloading tools.

The number of infected users of “web hard drives” – the South Korean term for the online storage services that allow uploading and sharing of content – has reportedly reached 600,000.

Malware designed to hide files was allegedly inserted into the Grid Program – the code that allows KT users to exchange data in a peer-to-peer method. The file exchange services subsequently stopped working, leading users to complain on bulletin boards.

The throttling shenanigans were reportedly ongoing for nearly five months, beginning in May 2020, and were carried out from inside one of KT's own datacenters.

The incident has reportedly drawn enough attention to warrant an investigation from the police, which have apparently searched KT's headquarters and datacenter and seized material in pursuit of evidence that the telco violated South Korea’s Communications Secrets Protection Act (CSPA) and the Information and Communications Network Act (ICNA).

The CSPA aims to protect the privacy and confidentiality of communications while the ICNA addresses the use and security of information and communications networks.

The investigation has reportedly uncovered an entire team at KT dedicated to detecting and interfering with the file transfers, with some workers assigned to malware development, others to distribution and operation, and others to wiretapping. Thirteen KT employees and partner employees have allegedly been identified and referred for potential prosecution.

The Register has reached out to KT to confirm the incident and will report back should a substantial reply materialize.

But according to local media, KT's position is that since the web hard drive P2P service itself is a malicious program, it has no choice but to control it.

P2P sites can burden networks, as can legitimate streaming - a phenomenon that saw South Korean telcos fight a bitter legal dispute with Netflix over who should foot the bill for network operation and construction costs.

A South Korean telco acting to curb inconvenient traffic is therefore not out of step with local mores. Distributing malware and deleting customer files are, however, not accepted practices as they raise ethical concerns about privacy and consent.

Of course, given files shared on P2P are notoriously targeted by malware distributors, perhaps KT the telco assumed its web hard drive users wouldn't notice a little extra virus here and there.

nobody using endgame anymore?

Note: I wanted to give this research a new home – all credits due to the amazing Stephen M. Phillips at Sacred Geometries & Their Scientific Meaning.

> “All things are arranged in a certain order, and this order constitutes the form by which the universe resembles God.” - Dante, Paradiso

This post reveals the Tree of Life map of all levels of reality, proves that it is encoded in the inner form of the Tree of Life and demonstrates that the Sri Yantra, the Platonic solids and the disdyakis triacontahedron are equivalent representations of this map.

Consciousness is the greatest mystery still unexplained by science. This section presents mathematical evidence that consciousness is not a product of physical processes, whether quantum or not, but encompasses superphysical realities whose number and pattern are encoded in sacred geometries.

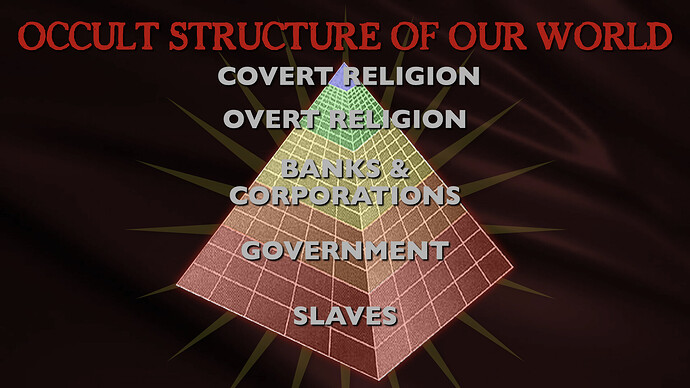

In this epic, all-day presentation, Mark Passio of What On Earth Is Happening exposes the origins of the two most devastating totalitarian ideologies of all time. Mark explains how both Nazism and Communism are but two masks on the same face of Dark Occultism, analyzing their similarities in both mindset and authoritarian methods of control. Mark also delves into the ways in which these insidious occult religions are still present, active and highly dangerous to freedom in the world today. This critical occult information is an indispensable component to any serious student of both world history and esoteric knowledge. Your world-view will be changed by this most recent addition to the Magnum Opus of Mark Passio.

EU halts controversial "chat control" vote amid strong backlash and insufficient support.

The European Union (EU) has managed to unite politicians, app makers, privacy advocates, and whistleblowers in opposition to the bloc’s proposed encryption-breaking new rules, known as “chat control” (officially, CSAM (child sexual abuse material) Regulation).

Thursday was slated as the day for member countries’ governments, via their EU Council ambassadors, to vote on the bill that mandates automated searches of private communications on the part of platforms, and “forced opt-ins” from users.

However, reports on Thursday afternoon quoted unnamed EU officials as saying that “the required qualified majority would just not be met” – and that the vote was therefore canceled.

This comes after several countries, including Germany, signaled they would either oppose or abstain during the vote. The gist of the opposition to the bill long in the making is that it seeks to undermine end-to-end encryption to allow the EU to carry out indiscriminate mass surveillance of all users.
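For readers unfamiliar with the "qualified majority" threshold mentioned above, here is a minimal sketch of the rule as set out in Article 16(4) of the Treaty on European Union: at least 55% of member states (15 of 27) must be in favour, together representing at least 65% of the EU population, and a blocking minority must include at least four members. The state names and population figures are hypothetical placeholders, not real data, and the function name is an assumption made for illustration.

```python
# Sketch of the EU Council's qualified-majority test (Article 16(4) TEU).
# State names and population figures below are hypothetical placeholders.

def qualified_majority(in_favour, populations):
    """Return True if the states in `in_favour` form a qualified majority.

    populations: dict mapping each Council member to its population.
    Abstentions are simply left out of `in_favour`, so they count against.
    """
    supporters = [s for s in populations if s in in_favour]
    others = len(populations) - len(supporters)

    # Condition 1: at least 55% of member states (15 of 27).
    states_ok = len(supporters) * 100 >= 55 * len(populations)

    # Condition 2: supporters represent at least 65% of the total population.
    pop_for = sum(populations[s] for s in supporters)
    pop_ok = pop_for * 100 >= 65 * sum(populations.values())

    # A blocking minority needs at least four members; with fewer,
    # the qualified majority is deemed attained.
    if states_ok and others < 4:
        return True
    return states_ok and pop_ok


# Hypothetical 27-member council: 4 large states, 23 small ones.
LARGE = {f"L{i}": 30 for i in range(1, 5)}
SMALL = {f"S{i}": 5 for i in range(1, 24)}
COUNCIL = {**LARGE, **SMALL}

# All 23 small states in favour, all 4 large ones opposed or abstaining:
# enough states, but under 65% of the population, so this prints False.
print(qualified_majority(set(SMALL), COUNCIL))
```

In this toy setup, a handful of large states opposing or abstaining is enough to stop a proposal even when most members support it, which is why signals from populous countries can matter so much ahead of a vote.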

The justification here is that such drastic new measures are necessary to detect and remove CSAM from the internet – but this argument is rejected by opponents as a smokescreen for finally breaking encryption, and exposing citizens in the EU to unprecedented surveillance while stripping them of the vital technology guaranteeing online safety.

Some squarely security- and privacy-focused apps, like Signal and Threema, said ahead of the expected vote that they would withdraw from the EU market if they had to include client-side scanning, i.e., automated monitoring.

WhatsApp hasn’t gone quite so far (yet) but Will Cathcart, who heads the app over at Meta, didn’t mince his words in a post on X when he wrote that what the EU is proposing – breaks encryption.

“It’s surveillance and it’s a dangerous path to go down,” Cathcart posted.

European Parliament (EP) member Patrick Breyer, who has been a vocal critic of the proposed rules, and also involved in negotiating them on behalf of the EP, on Wednesday issued a statement warning Europeans that if “chat control” is adopted – they would lose access to common secure messengers.

“Do you really want Europe to become the world leader in bugging our smartphones and requiring blanket surveillance of the chats of millions of law-abiding Europeans? The European Parliament is convinced that this Orwellian approach will betray children and victims by inevitably failing in court,” he stated.

“We call for truly effective child protection by mandating security by design, proactive crawling to clean the web, and removal of illegal content, none of which is contained in the Belgium proposal governments will vote on tomorrow (Thursday),” Breyer added.

And who better to assess the danger of online surveillance than the man who revealed its extraordinary scale, Edward Snowden?

“EU apparatchiks aim to sneak a terrifying mass surveillance measure into law despite UNIVERSAL public opposition (no thinking person wants this) by INVENTING A NEW WORD for it – ‘upload moderation’ – and hoping no one learns what it means until it’s too late. Stop them, Europe!,” Snowden wrote on X.

It appears that, at least for the moment, Europe has.

Global censorship demands.

In a statement issued on the occasion of the “International Day for Countering Hate Speech,” UN Secretary-General Antonio Guterres called for the global eradication of so-called “hate speech,” which he described as inherently toxic and entirely intolerable.

The issue of censoring “hate speech” stirs significant controversy, primarily due to the nebulous and subjective nature of its definition. At the heart of the debate is a profound concern: whoever defines what constitutes hate speech essentially holds the power to determine the limits of free expression.

This power, wielded without stringent checks and balances, leads to excessive censorship and suppression of dissenting voices, which is antithetical to the principles of a democratic society.

Guterres highlighted the historic and ongoing damage caused by hate speech, citing devastating examples such as Nazi Germany, Rwanda, and Bosnia to suggest that speech leads to violence and even crimes against humanity.

“Hate speech is a marker of discrimination, abuse, violence, conflict, and even crimes against humanity. We have time and again seen this play out from Nazi Germany to Rwanda, Bosnia and beyond. There is no acceptable level of hate speech; we must all work to eradicate it completely,” Guterres said.

Guterres also noted what he suggested is a worrying rise in antisemitic and anti-Muslim sentiments, which are being propagated both online and by prominent figures.

Guterres argued that countries are legally bound by international law to combat incitement to hatred while simultaneously fostering diversity and mutual respect. He urged nations to uphold these legal commitments and to take action that both prevents hate speech and safeguards free expression.

The UN General Assembly marked June 18 as the “International Day for Countering Hate Speech” in 2021.

Guterres has long promoted online censorship, complaining about the issue of online “misinformation” several times, describing it as “grave” and suggesting the creation of an international code to tackle it.

His strategy involves a partnership among governments, tech giants, and civil society to curb the spread of “false” information on social media, despite risks to free speech.

New Zealand's push reveals the Five Eyes alliance's massive increase in biometric surveillance on global travelers.

Big Brother might be always “watching you” – but guess what, five (pairs) of eyes sound better than one. Especially when you’re a group of countries out to do mass surveillance across different jurisdictions, and incidentally or not, name yourself by picking one from the “dystopian baby names” list.

But then again, those “eyes” might be so many and so ambitious in their surveillance bid, that they end up criss-crossed, not serving their citizens well at all.

And so, the Five Eyes, (US, Canada, Australia, New Zealand, UK) – an intelligence alliance, brought together by (former) colonial and language ties that bind – has been collecting no less than 100 times more biometric data – including demographics and other information concerning non-citizens – over the last 3 years, since about 2011.

That’s according to reports, which basically tell you – if you’re a Five Eyes national, or a visitor from one of the UN’s remaining 188 member countries – expect to be under thorough, including biometric, surveillance.

The program is (perhaps misleadingly?) known as the “Migration 5.” (“Known to One, Known to All” is reportedly the slogan. It sounds cringe, but also, given the promise of the Five Eyes – turns out, other than sounding embarrassing, it actually is.)

And at least as far as the news now surfacing about it, it was none other than “junior partner” New Zealand that gave momentum to reports about the situation. The overall idea is to keep a close, including a biometric, eye on the cross-border movement within the Five Eye member countries.

How that works for the US, with its own liberal immigration policy, is anybody’s guess at this point. But it does seem like legitimate travelers, with legitimate citizenship outside – and even inside – the “Five Eyes” might get caught up in this particular net the most.

“Day after day, people lined up at the United States Consulate, anxiously waiting, clutching the myriad documents they need to work or study in America,” a report from New Zealand said.

“They’ve sent in their applications, given up their personal details, their social media handles, their photos, and evidence of their reason for visiting. They press their fingerprints on to a machine to be digitally recorded.”

The overall “data hunger” between the 5 of these post WW2 – now “criss-crossed” – eyes has been described as rising to 8 million biometric checks over the past years.

“The UK now says it may reach the point where it checks everyone it can with its Migration 5 partners,” says one report.

Civil liberties groups warn of potential overreach and lack of transparency in public surveillance practices.

In the UK, a series of AI trials involving thousands of train passengers who were unwittingly subjected to emotion-detecting software raises profound privacy concerns. The technology, developed by Amazon and employed at various major train stations including London’s Euston and Waterloo, as well as Manchester Piccadilly, used artificial intelligence to scan faces and assess emotional states along with age and gender. Documents obtained by the civil liberties group Big Brother Watch through a freedom of information request unveiled these practices, which might soon influence advertising strategies.

Over the last two years, these trials, managed by Network Rail, implemented “smart” CCTV technology and older cameras linked to cloud-based systems to monitor a range of activities. These included detecting trespassing on train tracks, managing crowd sizes on platforms, and identifying antisocial behaviors such as shouting or smoking. The trials even monitored potential bike theft and other safety-related incidents.

The data derived from these systems could be utilized to enhance advertising revenues by gauging passenger satisfaction through their emotional states, captured when individuals crossed virtual tripwires near ticket barriers. Despite the extensive use of these technologies, the efficacy and ethical implications of emotion recognition are hotly debated. Critics, including AI researchers, argue the technology is unreliable and have called for its prohibition, supported by warnings from the UK’s data regulator, the Information Commissioner’s Office, about the immaturity of emotion analysis technologies.

According to Wired, Gregory Butler, CEO of Purple Transform, has mentioned discontinuing the emotion detection capability during the trials and affirmed that no images were stored while the system was active. Meanwhile, Network Rail has maintained that its surveillance efforts are in line with legal standards and are crucial for maintaining safety across the rail network. Yet, documents suggest that the accuracy and application of emotion analysis in real settings remain unvalidated, as noted in several reports from the stations.

Privacy advocates are particularly alarmed by the opaque nature and the potential for overreach in the use of AI in public spaces. Jake Hurfurt from Big Brother Watch has expressed significant concerns about the normalization of such invasive surveillance without adequate public discourse or oversight.

Jake Hurfurt, Head of Research & Investigations at Big Brother Watch, said: “Network Rail had no right to deploy discredited emotion recognition technology against unwitting commuters at some of Britain’s biggest stations, and I have submitted a complaint to the Information Commissioner about this trial.

“It is alarming that as a public body it decided to roll out a large scale trial of Amazon-made AI surveillance in several stations with no public awareness, especially when Network Rail mixed safety tech in with pseudoscientific tools and suggested the data could be given to advertisers.

“Technology can have a role to play in making the railways safer, but there needs to be a robust public debate about the necessity and proportionality of tools used.

“AI-powered surveillance could put all our privacy at risk, especially if misused, and Network Rail’s disregard of those concerns shows a contempt for our rights.”

Proposed measures could further erode user privacy under the guise of safety.

Big Tech coalition Digital Trust & Safety Partnership (DTSP), the UK’s regulator OFCOM, and the World Economic Forum (WEF) have come together to produce a report.

The three entities, each in their own way, are known for advocating for or carrying out speech restrictions and policies that can result in undermining privacy and security.

DTSP says it is there to “address harmful content” and makes sure online age verification (“age assurance”) is enforced, while OFCOM states its mission to be establishing “online safety.”

Now they have co-authored a WEF (WEF Global Coalition for Digital Safety) report – a white paper – that puts forward the idea of closer cooperation with law enforcement in order to more effectively “measure” what they consider to be online digital safety and reduce what they identify to be risks.

The importance of this is explained by the need to properly allocate funds and ensure compliance with regulations. Yet again, “balancing” this with privacy and transparency concerns is mentioned several times in the report almost as a throwaway platitude.

The report also proposes co-opting (even more) research institutions for the sake of monitoring data – as the document puts it, a “wide range of data sources.”

More proposals made in the paper would grant other entities access to this data, and there is a drive to develop and implement “targeted interventions.”

Under the “Impact Metrics” section, the paper states that these are necessary to turn “subjective user experiences into tangible, quantifiable data,” which is then supposed to allow for measuring “actual harm or positive impacts.”

To get there the proposal is to collaborate with experts as a way to understand “the experience of harm” – and that includes law enforcement and “independent” research groups, as well as advocacy groups for survivors.

Those, as well as law enforcement, are supposed to be engaged when “situations involving severe adverse effect and significant harm” are observed.

Meanwhile, the paper proposes collecting a wide range of data for the sake of performing these “measurements” – from platforms, researchers, and (no doubt select) civil society entities.

The report goes on to say it is crucial to make sure to find out best ways of collecting targeted data, “while avoiding privacy issues” (but doesn’t say how).

The resulting targeted interventions should be “harmonized globally.”

As for who should have access to this data, the paper states:

“Streamlining processes for data access and promoting partnerships between researchers and data custodians in a privacy-protecting way can enhance data availability for research purposes, leading to more robust and evidence-based approaches to measuring and addressing digital safety issues.”

Masking an attempt to surveil and silence online voices?

These days, as the saying goes – you can’t swing a cat without hitting a “paper of record” giving prominent op-ed space to some current US administration official – and this is happening very close to the presidential election.

This time, the New York Times and US Surgeon General Vivek Murthy got together, with Murthy’s own slant on what opponents might see as another push to muzzle social media ahead of the November vote, under any pretext.

A pretext is, as per Murthy: new legislation that would “shield young people from online harassment, abuse and exploitation,” and there’s disinformation and such, of course.

Coming from Murthy, this is inevitably branded as “health disinformation.” But the way digital rights group EFF sees it – requiring “a surgeon general’s warning label on social media platforms, stating that social media is associated with significant mental health harms for adolescents” – is just unconstitutional.

Whenever minors are mentioned in this context, the obvious question is – how do platforms know somebody’s a minor? And that’s where the privacy and security nightmare known as age verification, or “assurance” comes in.

Critics think this is no more than a thinly veiled campaign to unmask internet users under what the authorities believe is the platitude that cannot be argued against – “thinking of the children.”

Yet in reality, while it can harm children, the overall target is everybody else. Basically – in a just and open internet, any adult who wants to use this digital town square and express an opinion would not have to produce a government-issued photo ID to do so.

And, “nevermind” the fact that the same type of “advisory” is what is currently before the Supreme Court in the Murthy v. Missouri case, where the court is deliberating whether no less than the First Amendment was violated in the alleged – prior – censorship collusion between the government and Big Tech.

The White House is at this stage cautious to openly endorse the points Murthy made in the NYT think-piece, with a spokesperson, Karine Jean-Pierre, “neither confirming nor denying” anything.

“So I think that’s important that he’ll continue to do that work” – was the “nothing burger” of a reply Jean-Pierre offered when asked about the idea of “Murthy labels.”

But Murthy – and really, the whole gang around the current administration and the legacy media bending their way – now seems to be in going-for-broke mode ahead of November.

Some things never change.

If it looks like a duck… and in particular, quacks like a duck, it’s highly likely a duck. And so, even though the Stanford Internet Observatory is reportedly getting dissolved, the University of Washington’s Center for an Informed Public (CIP) continues its activities. But that’s not all.

CIP headed the pro-censorship coalitions the Election Integrity Partnership (EIP) and the Virality Project with the Stanford Internet Observatory, while the Stanford outfit was set up shortly before the 2020 vote with the goal of “researching misinformation.”

The groups led by both universities would publish their findings in real-time, no doubt, for maximum and immediate impact on voters. For some, what that impact may have been, or was meant to be, requires research and a study of its own. Many, on the other hand, are sure it targeted them.

So much so that the US House Judiciary Committee’s Weaponization Select Subcommittee established that EIP collaborated with federal officials and social platforms, in violation of free speech protections.

What has also been revealed is that CIP co-founder and leader is one Kate Starbird – who, as it turned out from ongoing censorship and speech-based legal cases, was once a secret adviser to Big Tech regarding “content moderation policies.”

Considering how that “moderation” was carried out, namely, how it morphed into unprecedented censorship, anyone involved should be considered discredited enough not to try the same this November.

However, even as SIO is shutting down, reports say those associated with its ideas intend to continue tackling what Starbird calls online rumors and disinformation. Moreover, she claims that this work has been ongoing “for over a decade” – apparently implying that these activities are not related to the two past, and one upcoming hotly contested elections.

And yet – “We are currently conducting and plan to continue our ‘rapid’ research — working to identify and rapidly communicate about emergent rumors — during the 2024 election,” Starbird is quoted as stating in an email.

Not only is Starbird not ready to stand down in her crusade against online speech, but reports don’t seem to be able to confirm that the Stanford group is actually getting disbanded, with some referring to the goings on as SIO “effectively” shutting down.

What might be happening is the Stanford Internet Observatory (SIO) becoming a part of Stanford’s Cyber Policy Center. Could the duck just be covering its tracks?

Reaffirming global resistance to government surveillance efforts.

Delta Chat, a messaging application celebrated for its robust stance on privacy, has yet again rebuffed attempts by Russian authorities to access encryption keys and user data. This defiance is part of the app’s ongoing commitment to user privacy, which was articulated forcefully in a response from Holger Krekel, the CEO of the app’s developer.

On June 11, 2024, Russia’s Federal Service for Supervision of Communications, Information Technology, and Mass Media, known as Roskomnadzor, demanded that Delta Chat register as a messaging service within Russia and surrender access to user data and decryption keys. In response, Krekel conveyed that Delta Chat’s architecture inherently prevents the accumulation of user data—be it email addresses, messages, or decryption keys—because it allows users to independently select their email providers, thereby leaving no trail of communication within Delta Chat’s control.

The app, which operates on a decentralized platform utilizing existing email services, ensures that it stores no user data or encryption keys. Instead, that data remains in the hands of the email providers and the users, with keys safeguarded on users’ devices, making it technically unfeasible for Delta Chat to fulfill any government’s data requests.

Highlighting the ongoing global governmental challenges against end-to-end encryption, a practice vital to safeguarding digital privacy, Delta Chat outlined its inability to comply with such demands on its Mastodon account.

They noted that this pressure is not unique to Russia, but is part of a broader international effort by various governments, including those in the EU, the US, and the UK, to weaken the pillars of digital security.

Controversial technology.

The Internal Revenue Service (IRS) has come under fire for its decision to route Freedom of Information Act (FOIA) requests through a biometric identification system provided by ID.me. This arrangement requires users who wish to file requests online to undergo a digital identity verification process, which includes facial recognition technology.

Concerns have been raised about this method of identity verification, notably the privacy implications of handling sensitive biometric data. Although the IRS states that biometric data is deleted promptly—within 24 hours in cases of self-service and 30 days following video chat verifications—skeptics, including privacy advocates and some lawmakers, remain wary, particularly as they don’t believe people should have to subject themselves to such measures in the first place.

Criticism has particularly focused on the appropriateness of employing such technology for FOIA requests. Alex Howard, the director of the Digital Democracy Project, expressed significant reservations. He stated in an email to FedScoop, “While modernizing authentication systems for online portals is not inherently problematic, adding such a layer to exercising the right to request records under the FOIA is overreach at best and a violation of our fundamental human right to access information at worst, given the potential challenges doing so poses.”

Although it is still possible to submit FOIA requests through traditional methods like postal mail, fax, or in-person visits, and through the more neutral FOIA.gov, the IRS’s online system defaults to using ID.me, citing speed and efficiency.

An IRS spokesperson defended this method by highlighting that ID.me adheres to the National Institute of Standards and Technology (NIST) guidelines for credential authentication. They explained, “The sole purpose of ID.me is to act as a Credential Service Provider that authenticates a user interested in using the IRS FOIA Portal to submit a FOIA request and receive responsive documents. The data collected by ID.me has nothing to do with the processing of a FOIA request.”

Despite these assurances, the integration of ID.me’s system into the FOIA request process continues to stir controversy as the push for online digital ID verification is a growing and troubling trend for online access.

An Evansville officer's resignation reveals the murky misuse of powerful surveillance tools.

The use of Clearview’s facial recognition tech by US law enforcement is controversial in and of itself, and it turns out some police officers can use it “for personal purposes.”

One such case happened in Evansville, Indiana, where an officer had to resign after an audit showed the tech was “misused” to carry out searches that had nothing to do with his cases.

Clearview AI, which has been hit with fines and much criticism – only to see its business grow stronger than ever – is almost casually described in legacy media reports as “secretive.”

But that sits badly in juxtaposition with another description of the company, as peddling to law enforcement (and the Department of Homeland Security in the US) some of the most sophisticated facial recognition and search technology in existence.

However, the Indiana case is not about Clearview itself – the only reason the officer, Michael Dockery, and his activities got exposed is because of a “routine audit,” as reports put it. And the audit was necessary to get Clearview’s license renewed by the police department.

In other words, the focus is not on the company and what it does (and how much citizens are allowed to know about what it does and how) but on there being audits, and those ending up smoking out some cops who performed “improper searches.” It’s almost a way to assure people Clearview’s tech is okay and subject to proper checks.

But that remains hotly contested by privacy and rights groups, who point out that, to the surveillance industry, Clearview is the type of juggernaut Google is on the internet.

And the two industries meet here (coincidentally?) because face searches on the internet are what got the policeman in trouble. The narrative is that all is well with using Clearview – there are rules, one is to enter a case number before doing a dystopian-style search.

“Dockery exploited this system by using legitimate case numbers to conduct unauthorized searches (…) Some of these individuals had asked Dockery to run their photos, while others were unaware,” said a report.

But – why is any of this “dystopian”?

This is why. Last March, Clearview CEO Hoan Ton-That told the BBC that the company had to date run nearly one million searches for US law enforcement, matching them to a database of 30 billion images.

“These images have been scraped from people’s social media accounts without their permission,” a report said at the time.

EU accelerates controversial "chat control" vote, sparking privacy and censorship concerns.

Bad rules are only made better if they are also opt-in (that is, a user is not automatically included, but has to explicitly consent to them).

But the European Union (EU) looks like it’s “reinventing” the meaning and purpose of an opt-in: when it comes to its child sexual abuse regulation, CSAR, a vote is coming up that would block users who refuse to opt in from sending photos, videos, and links.

According to a leak of minutes just published by the German site Netzpolitik, the vote on what opponents call “chat control” – and lambast as really a set of mass surveillance rules masquerading as a way to improve children’s safety online – is set to take place as soon as June 19.

That is apparently much sooner than those keeping a close eye on the process of adoption of the regulation would have expected.

Due to its nature, the EU is habitually a slow-moving, gargantuan bureaucracy, but it seems that when it comes to pushing censorship and mass surveillance, the bloc finds a way to expedite things.

Netzpolitik’s reporting suggests that the EU’s centralized Brussels institutions are succeeding in getting all their ducks in a row, i.e., breaking not only encryption (via “chat control”) – but also resistance from some member countries, like France.

The minutes from the meeting dedicated to the current version of the draft state that France is now “significantly more positive” where “chat-control is concerned.”

Others, like Poland, would still like to see the final regulation “limited to suspicious users only, and expressed concerns about the consent model,” says Netzpolitik.

But it seems the vote on a Belgian proposal, presented as a “compromise,” is now expected to happen much sooner than previously thought.

The CSAR proposal’s “chat control” segment mandates accessing encrypted communications as the authorities look for what may qualify as content related to child abuse.

The strong criticism of such a rule stems not only from the danger of undermining encryption but also from its inaccuracy and ultimate inefficiency regarding the stated goal – all while innocent people’s privacy is seriously jeopardized.

And there’s the legal angle, too: the EU’s own legal service last year “described chat control as illegal and warned that courts could overturn the planned law,” the report notes.

OpenAI has expanded its leadership team by welcoming Paul M. Nakasone, a retired US Army general and former director of the National Security Agency, as its latest board member.

The organization highlighted Nakasone’s role on its blog, stating, “Mr. Nakasone’s insights will also contribute to OpenAI’s efforts to better understand how AI can be used to strengthen cybersecurity by quickly detecting and responding to cybersecurity threats.”

The inclusion of Nakasone on OpenAI’s board is a decision that warrants a critical examination and will likely raise eyebrows. Nakasone’s extensive background in cybersecurity, including his leadership roles in the US Cyber Command and the Central Security Service, undoubtedly brings a wealth of experience and expertise to OpenAI. However, his association with the NSA, an agency often scrutinized for its surveillance practices and controversial data collection methods, raises important questions about the implications of such an appointment as the company’s product ChatGPT is, through a deal with Apple, about to be available on every iPhone. The company is also already tightly integrated into Microsoft software.

Firstly, while Nakasone’s cybersecurity acumen is an asset, it also introduces potential concerns about privacy and the ethical use of AI. The NSA’s history of mass surveillance, highlighted by the revelations of Edward Snowden, has left a lasting impression on the public’s perception of government involvement in data security and privacy.

By aligning itself with a figure so closely associated with the NSA, OpenAI might raise concerns about a shift towards a more surveillance-oriented approach to cybersecurity, which could be at odds with the broader tech community’s push for greater transparency and ethical standards in AI development.

Secondly, Nakasone’s appointment could raise doubts about the direction of OpenAI’s policies and practices, particularly those related to cybersecurity and data handling.

Nakasone’s role on the newly established Safety and Security Committee, which will conduct a 90-day review of OpenAI’s processes and safeguards, places him in a position of significant influence. This committee’s recommendations will likely shape OpenAI’s future policies, potentially steering the company towards practices that reflect Nakasone’s NSA-influenced perspective on cybersecurity.

Sam Altman, the CEO of OpenAI, has become a controversial figure in the tech industry, not least due to his involvement in the development and promotion of eyeball-scanning digital ID technology. This technology, primarily associated with Worldcoin, a cryptocurrency project co-founded by Altman, has sparked significant debate and criticism for several reasons.

The core concept of eyeball scanning technology is inherently invasive. Worldcoin’s approach involves using a device called the Orb to scan individuals’ irises to create a unique digital identifier.

Gates Foundation's renewed grant to the Alan Turing Institute sparks debate on privacy and security of digital ID systems.

The Gates Foundation continues to bankroll various initiatives around the world aimed at introducing digital ID and payments by the end of this decade.

The scheme is known as the digital public infrastructure (DPI), and those pushing it include private or informal groups like the said foundation and the World Economic Forum (WEF), but also the US, the EU, and the UN.

And now, the UK-based AI and data science research group Alan Turing Institute has become the recipient of a renewed grant, this time amounting to $4 million, given by the Gates Foundation.

This has been announced as initial funding for the Institute’s initiative to ensure “responsible” implementation of ID services.

The Turing Institute is presenting its work that will be financed by the grant over the next three years as a multi-disciplinary project focused on positive issues, such as ensuring that launching DPI elements (like digital ID) is done with privacy and security concerns properly addressed.

But – given the past and multi-year activities of the Gates Foundation, nobody should be blamed for interpreting this as an attempt to actually whitewash these key issues – namely privacy and security – that opponents of centralizing people’s identities through digital ID schemes consistently warn about.

In announcing the renewed grant, the Turing Institute made it clear that it considers implementing “ID services” a positive direction, one that, according to the organization, improves everything from inclusion and access to services to human rights.

But apparently, some “tweaking” around privacy and security (or at least “enhancing” the perception of how they are handled in digital ID programs) – is needed. Hence, perhaps, the new initiative.

“The project aims to enhance the privacy and security of national digital identity systems, with the ultimate goal to maximize the value to beneficiaries, whilst limiting known and unknown risks to these constituents and maintaining the integrity of the overall system,” the Institute said.


A lot of big words, and positive sentiment – but, in simpler words, what the statement amounts to is a promise to somehow “auto-magically” cover all the bases. That is – at once secure the benefits while obliterating the negatives. (Maybe the Institute has a spare bridge to sell, too /s)

The worry here is that this could be yet another Gates Foundation PR blitz aimed at improving the image of the “DPI” push, which rights-minded people mistrust – a distrust that stems in no small part from doubts that its biggest proponents have any genuinely noble intentions to begin with.

An interesting piece of information that we do learn from the announcement is that every year, “billions of dollars are being invested to develop more secure, scalable, and user-friendly identity (digital ID) systems.”

Mozilla sparked debate over its commitment to open internet principles.

Firefox users in Russia can once again install several anti-censorship and pro-privacy extensions, after Mozilla told Reclaim The Net it has reversed its decision to block these add-ons. Previously, developers and users had reported that the extensions were unavailable, suspecting Mozilla, the developer of Firefox, was behind the block.

The extensions in question—Censor Tracker, Runet Censorship Bypass, Planet VPN, and FastProxy—had become unavailable in the Russian market. Initially, it was unclear whether Mozilla made the decision independently or in response to an order from authorities.

One developer from the team behind Censor Tracker had confirmed that the add-on had recently become unavailable in Russia but stated they were unsure why.

Comments on the developer’s post speculated that the decision might have been Mozilla’s.

Russian users attempting to install the add-ons were met with the message, “unavailable in your region,” while these extensions remained accessible in other regions, including the US.

The initial decision and subsequent reversal have sparked discussions within the Firefox community about Mozilla’s guiding principles and their application in today’s regulatory environment.

Nonetheless, the reinstatement of these tools has been welcomed by those who continue to use Firefox for its dedication to privacy.

In a statement to Reclaim The Net, Mozilla announced that it was reversing its decision to block the tools.

“In alignment with our commitment to an open and accessible internet, Mozilla will reinstate previously restricted listings in Russia. Our initial decision to temporarily restrict these listings was made while we considered the regulatory environment in Russia and the potential risk to our community and staff,” the Mozilla spokesperson said. “Mozilla’s core principles emphasize the importance of an internet that is a global public resource, open and accessible to all. Users should be free to customize and enhance their online experience through add-ons without undue restrictions.”

The lawsuit claims the law violates the First Amendment by mandating age verification, risking privacy and online security.

A group associated with big (and smaller) tech companies has filed a lawsuit claiming First Amendment violations against the state of Mississippi.

This comes after long years of these companies scoffing at First Amendment speech protections, as they censored their users’ speech and/or deplatformed them.

We obtained a copy of the lawsuit for you here.

It might seem hypocritical, but at the same time, even a broken clock is right twice a day. In this case, it is the industry group NetChoice that has launched the legal battle (NetChoice v. Fitch), at the center of which is state bill HB 1126 which requires age verification to be implemented on social networks.

NetChoice correctly observes that forcing people (for the sake of providing parental consent) to essentially unmask themselves through age verification (“age assurance”) exposes sensitive personal data, undermines their constitutional rights, and poses a threat to the online security of all internet users.

The filing against Mississippi also asserts that it is up to parents – rather than what NetChoice calls “Big Government” – to, in different ways, assure that their children are using sites and online services in an age-appropriate manner.

HB 1126 is therefore asserted to represent “an unconstitutional overreach,” and if passed, the industry group said, “may result in the censorship of vast amounts of speech online.”

Age verification is a controversial subject almost everywhere it crops up around the world, particularly in those countries that consider themselves democracies.

Another state, Indiana, is being sued on similar grounds – violation of constitutional protections – and for similar reasons, namely, the age verification push.

This time, it’s not done in the name of Big Tech, but by what some reports choose to dub “Big Porn.” Indiana State Attorney General Todd Rokita is named as a defendant in this lawsuit, brought by major porn sites, industry associations, and marketing and production companies.

And while those behind the state law which is about to come into force next month claim it is there to protect minors from adult content (via age verification), the plaintiffs allege that the law breaks not only the First, but also the Fifth, Eighth and 14th Amendments of the US Constitution – and the Communications Decency Act (CDA).

Critics argue Garland's op-ed is an indirect attempt to sway public opinion ahead of the November election.

Some might see US Attorney General Merrick Garland getting quite involved in campaigning ahead of the November election – albeit indirectly so, as a public servant whose primary concern is supposedly how to keep Department of Justice (DoJ) staff “safe.”

And, in the process, he brings up “conspiracy theorists,” branding them as undermining the judicial process in the US – because they dare question the validity of a particular judicial process that was aimed at former President Trump.

In an opinion piece published by the Washington Post, Garland used one instance that saw a man convicted for threatening a local FBI office to draw blanket and dramatic conclusions that DoJ staff have never operated in a more dangerous environment, where “threats of violence have become routine.”

It all circles back to the election, and Garland makes little effort to present himself as neutral. Other than “conspiracy theories,” his definition of a threat includes calls to defund the department that was responsible for going after the former president.

Ironically, while the tone of his op-ed and the topics and examples he chooses demonstrate his own bias, Garland goes after those who claim that the DoJ is politicized with the goal of influencing the election.

The attorney general goes on to quote “media reports” – he doesn’t say which, but one can assume those following the same political line – which are essentially (not his words) hyping up their audiences to expect more “threats.”

“Media reports indicate there is an ongoing effort to ramp up these attacks against the Justice Department, its work and its employees,” is how Garland put it.

And he pledged that “we will not be intimidated” by these by-and-large nebulous “threats,” with the rhetoric at that point in the article ramped up to refer to them as “attacks.”

Garland’s opinion piece is not the only attempt by the DoJ to absolve itself of accusations of acting in a partisan way, instead of serving the interests of the public as a whole.

Thus, Assistant Attorney General Carlos Uriarte wrote to House Republicans, specifically House Judiciary Chairman Jim Jordan, to accuse him of making “completely baseless” accusations that the DoJ orchestrated the New York trial of Donald Trump.

While, as it were, protesting too much (CNBC called it “the fiery reply”), Uriarte also went for the “conspiracy theory conspiracy theory”:

“The conspiracy theory that the recent jury verdict in New York state court was somehow controlled by the Department is not only false, it is irresponsible,” he wrote.

Garland and FBI Director Chris Wray recently discussed plans to counter election threats during a DoJ Election Threats Task Force meeting. Critics, suspicious of the timing with the upcoming election, cite the recent disbandment of the DHS Intelligence Experts Group.

Mark Passio - Government Is Slavery

Mark Passio originally delivered this presentation at The Great Create event in Perry, GA on May 12, 2023. In this talk, Mark lays out the underlying reasons Government is an immoral institution based upon coercion and violence, thus constituting it as a form of human Slavery. Mark goes on to explain that the only truly just and moral ideological position to align oneself with is that of Abolitionism. The world-view of anyone who watches this presentation is certain to be challenged, and possibly changed forever.

this leads to you not being able to use the internet without associating it with your digital id

absolutely, more resources here! https://git.hackliberty.org/hackliberty.org/Hack-Liberty-Resources

thanks for sharing, Monero is the way.

the modem or mobile router in the car is what can be tracked by telcos via IMEI pings with or without an ESIM. telematics units can be disabled by pulling fuses and you should also call to opt out with most car manufacturers.

Thanks for the post, I've made links.hackliberty.org available over Tor at http://snb3ufnp67uudsu25epj43schrerbk7o5qlisr7ph6a3wiez7vxfjxqd.onion

at least you admit to engaging in association fallacy -- good luck with that

FBI directed big tech to censor the hunter biden laptop story prior to the 2020 election. Is that misinformation too? I recommend you actually do your own research instead of being spoonfed talking points by the mainstream media.

and at the cost of consumer privacy

the conspiracy theorist would say that KYC would give the opportunity for jackboots to kick your door in the minute you use your internet infrastructure to criticize the government

thankfully the internet is a global marketplace

Because of the hard work done by American Patriots, Truth Seekers, and Researchers all over the globe. See for yourself.

Realize that they are just buying data that ANYONE can buy. This is the real danger.

right? we should give every transaction to the state to stop bad guys.

Following the latest batch of court documents, these names have been added:

- Richard Branson

- Sergey Brin

click on the link in the post

One allegation already made public concerns David Copperfield, an associate of both Casablancas and Trump, who judged Look of the Year in 1988 and 1991, and once dated another Elite supermodel, Claudia Schiffer. Two years ago, as the #MeToo movement reverberated through the entertainment industry, he was the subject of allegations by Brittney Lewis, a 17-year-old contestant in the 1988 Look of the Year, held in Japan. According to her account, published on the entertainment news website The Wrap, Copperfield invited her to a show in California after she had returned home to Utah. Lewis alleged that she saw Copperfield pour something into her glass and then blanked out, but says she retained hazy recollections of him sexually assaulting her in his hotel room.

In reference to the court documents, John Casablancas was mentioned only in questioning without any direct allegations. However, I believe he came up in questioning because of his relationship with David Copperfield, the magician, and pedophile it seems.

Rich people can afford to pay lawyers and evade courts; now the rich and powerful have the support of the captured system, which is why Epstein was tipped off to his search warrant.