Setting aside stuff like Plan 9 and Manos and The Room and Birdemic, probably Star Trek XI, the one that J.J. Abrams made. Splicing together test footage of Bela Lugosi with a chiropractor as his stand-in is one thing, but desecrating something beautiful is a sin.

The funny thing about heliocentrism is, that isn't really the modern view either. The modern view is that there are no privileged reference frames, and heliocentrism and geocentrism are just choices of reference frame. You can construct consistent physical models from either; for example, you'll probably use a geocentric model if you're gonna launch a satellite.
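Roughly, in Newtonian terms (a simplification; the fully frame-agnostic statement needs general relativity): if $\mathbf{r}$ is a satellite's position in a Sun-centered inertial frame, $\mathbf{R}_\oplus(t)$ is Earth's position in that frame, and $\mathbf{F}$ is the real force on the satellite of mass $m$, then moving the origin to Earth's center only adds one fictitious force term to the equation of motion:

$$
\mathbf{r}' = \mathbf{r} - \mathbf{R}_\oplus(t), \qquad m\,\ddot{\mathbf{r}}' = \mathbf{F} - m\,\ddot{\mathbf{R}}_\oplus(t)
$$

Both frames predict the same trajectory; the geocentric one is simply easier to work with when everything you care about is near Earth. (If the frame also rotates with Earth, Coriolis and centrifugal terms show up as well.)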

But another fun one is the so-called discovery of oxygen, which is really about what's going on with fire. Before Lavoisier, the dominant belief was that fire is the release of phlogiston. What discredited this was the observation that some materials, metals in particular, get heavier when burned: if burning released something, they should have gotten lighter.

I think it's better to think about what swap is, and the right answer might well be zero. If a process needs memory and there isn't any free RAM, then existing stuff in memory is moved out to the swap file/partition to make room. This is incredibly slow. If there isn't enough memory or swap available, then at least one process (one hopes the one that made the unfulfillable request for memory) is killed.

If you ever do start swapping heavily, your computer will grind to a halt.

Maybe someone will disagree with me, and if someone does I'm curious why, but unless you're in some sort of very high memory utilization situation, processes being killed is probably easier to deal with than the huge delays caused by swapping.

Edit: Didn't notice what community this was. Since it's a webserver, the answer requires some understanding of utilization. You might want to look into swap files rather than swap partitions, since I'm pretty sure they're easier to resize as conditions change.
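If you want numbers to go with that understanding of utilization, here's a minimal Python sketch that reports how much RAM and swap are in use. It assumes Linux and the usual `/proc/meminfo` fields (`MemTotal`, `MemAvailable`, `SwapTotal`, `SwapFree`); the function names are made up for illustration. Watching these over time on the actual server is probably a better guide to sizing swap than a rule of thumb.

```python
"""Minimal sketch: RAM and swap utilization from /proc/meminfo (Linux only).

Assumes the standard /proc/meminfo line format, e.g. "SwapTotal:  2097148 kB".
parse_meminfo and utilization are illustrative names, not a library API.
"""
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: first numeric value}."""
    info: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields:
            info[name.strip()] = int(fields[0])  # most values are in kB
    return info


def utilization(info: dict[str, int]) -> tuple[float, float]:
    """Return (fraction of RAM in use, fraction of swap in use).

    Swap utilization is reported as 0.0 when there is no swap configured.
    """
    ram = 1.0 - info["MemAvailable"] / info["MemTotal"]
    swap_total = info.get("SwapTotal", 0)
    swap = 1.0 - info.get("SwapFree", 0) / swap_total if swap_total else 0.0
    return ram, swap


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        ram, swap = utilization(parse_meminfo(meminfo.read_text()))
        print(f"RAM in use: {ram:.1%}, swap in use: {swap:.1%}")
```

Run it periodically (cron, a monitoring agent, whatever you already have) and look at the peaks, not the averages.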

Userland malloc comes from libc, which on Linux is most likely glibc. Maybe this will tell you what you wanna know: https://sourceware.org/glibc/wiki/MallocInternals
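If you're not sure which libc (and therefore which malloc) you're actually getting, Python's standard library can tell you. A minimal sketch, assuming Python 3 on a Unix-like system:

```python
import platform

# platform.libc_ver() is part of the standard library. On glibc systems it
# typically returns ("glibc", "<version>"); on other C libraries (e.g. musl)
# or other operating systems it may return empty strings.
lib, version = platform.libc_ver()
print(lib or "unknown libc", version)
```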

As I recall, the basic difference between an employee and a contractor is whether the employer can dictate time, place, and manner. The problem for gig "contractors" is that they're in a much tougher spot on exercising their rights, since not many people who can afford a lawyer deliver food. And they aren't exactly in short supply, so if Uber oversteps and individual "contractors" try to push back, they'll just be fired. Which gets back to the lawyer issue.

Sounds like gin and tea, served hot with a twist of lemon.

I'm not sure this is a level-headed take... They say, when someone tells you who they are, believe them. Meta has already made it very clear who they are; I'm not sure skepticism is really in order.

I'm not a Mastodon expert, but I'm pretty sure you can still get their memes if they reply to you (or @ you), or if they post to a tag you're following.

Well... They are of course right about the fact that these sorts of decentralized systems don't have a lot of privacy. It's necessary to make most everything available to most everyone to be able to keep the system synchronized.

So stuff like Meta being able to profile you based on statistical demographic analysis basically can't be stopped.

It seems to me, the dangers are more like...

Meta will do the usual rage-baiting on its own servers, which means that their upvotes will reflect that, and those posts will be pushed to federated instances. This will almost certainly pollute the system with tons of stupid bullshit, and will basically necessitate defederating.

It'll bring in a ton of, pardon the word, normies. Facebook became unsavory when your racist uncle started posting terrible memes, and his memes will be pushed to your Mastodon feed. This will basically necessitate defederating.

Your posts will be pushed to Meta servers, which means your racist uncle will start commenting on them. This will basically necessitate defederating.

Then, yes, there's the EEE (embrace, extend, extinguish) danger. Hopefully the Mastodon developers will resist that. On the plus side, if Meta does try to invade Lemmy, I'm pretty confident the Lemmy developers won't give them the time of day.

It goes along with how they've stopped calling it a user interface and started calling it a user experience. Interface implies the computer is a tool that you use to do things, while experience implies that the things you can do are ready made according to, basically, usage scripts that were mapped out by designers and programmers.

No sane person would talk about a user's experience with a socket wrench, and that's how you know socket wrenches are still useful.

Mine is that a cellphone should be a phone first, instead of being a shitty computer first and a cellphone as a distant afterthought.

I suppose I disagree with the formulation of the argument. The Entscheidungsproblem and the halting problem describe limitations on formal analysis. It isn't relevant to talk about either of them in terms of "solving them"; that's why we use the term undecidable. The halting problem asks, in modern terms:

Given any computer program and an input to it, can a single second program always decide whether the first program halts (i.e., finishes running) on that input?

The answer to that question is no. In limited terms, this tells you something fundamental about the capabilities of Turing machines and lambda calculus; in general terms, this tells you something deeply important about formal analysis. This all started with the question:

Can you create a formal process for deciding whether a proposition, given an axiomatic system in first-order logic, is always true?

The answer to this question is also no. Turing machines and the lambda calculus, the models of computation behind digital computers, were devised as a means of specifying formal processes for solving logic problems, so Turing proved the Entscheidungsproblem undecidable by way of what we now call the halting problem. This is why there are still open logic problems despite the invention of digital computers, and despite how many FLOPS a modern supercomputer can pull off.
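The core of the "no" fits in a few lines. Here's a Python sketch of the diagonal argument (the usual modern textbook version, not Turing's original formulation in terms of machines that compute numbers). `halts` stands for any hypothetical decider; `make_contrarian` and `check` are names made up for this illustration.

```python
"""Sketch of the diagonal argument behind the halting problem.

`halts` stands for ANY hypothetical decider that claims to answer
"does program(arg) halt?". Whatever it does, the program built by
make_contrarian makes it wrong about at least one case.
"""
from typing import Callable

Program = Callable[[object], object]
Decider = Callable[[Program, object], bool]


def make_contrarian(halts: Decider) -> Program:
    """Build a program that does the opposite of what `halts` predicts."""

    def contrarian(program: Program) -> object:
        if halts(program, program):
            while True:  # predicted to halt, so loop forever instead
                pass
        return "halted"  # predicted to loop forever, so halt right away

    return contrarian


def check(halts: Decider) -> str:
    """Ask `halts` about contrarian(contrarian) and show that its answer is wrong."""
    c = make_contrarian(halts)
    if halts(c, c):
        # Running c(c) here would hang, precisely because halts said it halts.
        return "decider said 'halts', but c(c) loops forever"
    result = c(c)  # safe: c halts exactly when the decider says it won't
    return f"decider said 'loops', but c(c) returned {result!r}"


print(check(lambda program, arg: False))
print(check(lambda program, arg: True))
```

Swap in any decider you like, however clever; `check` always reports a contradiction, which is the whole argument: no always-correct `halts` can exist. Only the "loops" branch is actually executed, since the other one would hang.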

We don't use formal process for most of the things we do. And when we do try to use formal process for ourselves, it turns into a nightmare called civil and criminal law. The inadequacies of those formal processes are why we have a massive judicial system, and why the whole thing has devolved into a circus. Importantly, the inherent informality of law in practice is why we have so many lawyers, and why they can get away with charging so much.

As for whether you need a computer program that can effectively analyze computer programs in order to write a computer program that can effectively write computer programs, consider this: even the loosey goosey horseshit called "deep learning" is based on error functions. If you can't compute how far away you are from your target, then you've got nothing.

This is proof of one thing: that our brains are nothing like digital computers as laid out by Turing and Church.
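The "error function" point in miniature: here's a minimal Python sketch of gradient descent fitting a single weight by minimizing mean squared error. It's a toy (one parameter, made-up data, an arbitrary learning rate and step count), not how any deep learning library is structured, but the core loop is the same idea: compute how far off you are, then step in the direction that reduces it.

```python
"""Minimal sketch: learning as minimizing an error function.

Fits y = w * x to a few made-up data points by gradient descent on the
mean squared error. The data, learning rate, and step count are arbitrary.
"""


def mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error: how far the model's predictions are from the targets."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def fit(xs: list[float], ys: list[float], lr: float = 0.05, steps: int = 200) -> float:
    """Start at w = 0 and repeatedly step downhill on the error surface."""
    w = 0.0
    for _ in range(steps):
        w -= lr * mse_grad(w, xs, ys)
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # made-up data generated by y = 2x
w = fit(xs, ys)
print(f"w = {w:.4f}, error = {mse(w, xs, ys):.2e}")
```

Without `mse` (or some other computable measure of "wrongness"), `fit` has nothing to follow.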

What I mean about compilers is, a compiler optimization is only valid if the rewritten code does exactly the same thing under all conditions as what the human wrote. This is really only feasible if the code in question doesn't include any branches (ifs, loops, function calls). A straight-line section of code with no branches into it except at its start and none out of it except at its end is called a basic block. Rust is special because it harshly constrains the kinds of programs you can write: another consequence of the halting problem is that, in general, you can't track pointer aliasing outside a basic block, but Rust's constraints on programs do make this possible. It just foists the intellectual load onto the programmer. This is also why Rust is far and away my favorite language; I respect the boldness of this play, and the benefits far outweigh the drawbacks.
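Finding the basic blocks themselves is the easy part. Here's a Python sketch using the standard "leaders" approach over a made-up instruction format (the opcodes `label`, `jump`, and `branch` and the tuple encoding are hypothetical, and function calls are ignored for simplicity). An optimization that stays inside one of these blocks doesn't have to reason about control flow at all.

```python
"""Minimal sketch: split a list of "instructions" into basic blocks.

The instruction format is hypothetical: each instruction is a tuple whose
first element is an opcode. "label" marks a jump target; "jump" and "branch"
transfer control. A block starts at a leader (the first instruction, a jump
target, or the instruction right after a jump/branch) and runs to the next one.
"""

Instr = tuple[str, ...]

JUMPS = {"jump", "branch"}


def basic_blocks(code: list[Instr]) -> list[list[Instr]]:
    if not code:
        return []
    leaders = {0}
    for i, instr in enumerate(code):
        if instr[0] == "label":
            leaders.add(i)  # a jump target starts a new block
        if instr[0] in JUMPS and i + 1 < len(code):
            leaders.add(i + 1)  # so does whatever follows a jump or branch
    starts = sorted(leaders)
    ends = starts[1:] + [len(code)]
    return [code[s:e] for s, e in zip(starts, ends)]


program = [
    ("load", "x"),
    ("add", "x", "1"),
    ("branch", "x", "L1"),
    ("store", "y"),
    ("label", "L1"),
    ("mul", "x", "2"),
    ("jump", "L1"),
]
for block in basic_blocks(program):
    print(block)
```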

To me, general AI means a computer program having at least the same capabilities as a human. You can go further down this rabbit hole and read about the question that spawned the halting problem, the Entscheidungsproblem (decision problem), to see that AI is actually more impossible than I let on.

Computer numerical simulation is a different kind of shell game from AI. The only reason it's done is that most differential equations can't be solved in closed form, so instead they're discretized and approximated. Zeno's paradox for the modern world. Since the discretization never quite works out, the models are then hacked to make the results look right. This is also why they always want more FLOPS: they believe that, if you just discretize finely enough, you'll eventually reach infinity (or the infinitesimal).

This also should not fill you with hope for general AI.
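To see what "discretized and approximated" means in practice, here's a minimal Python sketch using forward Euler, the simplest scheme, on an equation that does have a closed-form solution, so the error can be measured exactly. Real simulation codes use far more sophisticated schemes; this only shows the basic trade-off between resolution and error.

```python
"""Minimal sketch: discretize dy/dt = -y, y(0) = 1 with forward Euler.

The exact solution is y(t) = exp(-t). Euler is first-order, so making the
step 10x smaller makes the error at t = 1 roughly 10x smaller, but the error
never reaches zero at any finite resolution.
"""
import math


def euler(steps: int, t_end: float = 1.0) -> float:
    """Approximate y(t_end) using `steps` equal time steps."""
    h = t_end / steps
    y = 1.0
    for _ in range(steps):
        y += h * (-y)  # y_{n+1} = y_n + h * f(y_n), with f(y) = -y
    return y


exact = math.exp(-1.0)
for steps in (10, 100, 1000, 10000):
    approx = euler(steps)
    print(f"{steps:>6} steps: y(1) ~ {approx:.8f}, error = {abs(approx - exact):.2e}")
```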

Evidence, not really, but that's kind of meaningless here since we're talking theory of computation. It's a direct consequence of the undecidability of the halting problem. General mathematical analysis of loops can't be done, because loops, in general, don't take on any particular value; if they did, then the halting problem would be decidable. Given that writing a computer program requires an exact specification, which cannot be provided for the general analysis of computer programs, general AI trips and falls at the very first hurdle: being able to write other computer programs. Which should be a simple task, compared to the other things people expect of it.

Yes, there's more complexity here (what about compiler optimization, or Rust's borrow checker?), which I don't care to get into at the moment; suffice it to say, those only operate under certain special conditions. To posit general AI, you need to think bigger than basic block instruction reordering.

This stuff should all be obvious, but here we are.
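A concrete illustration of how hard loop analysis gets: the Python function below has a one-line loop body, yet nobody knows whether that loop terminates for every positive input. This is the Collatz conjecture, which is still open; it illustrates the difficulty rather than proving anything about the general case.

```python
"""Minimal sketch: a tiny loop whose termination nobody can currently prove.

collatz_steps(n) loops until n reaches 1. It has halted for every n anyone
has tried, but whether it halts for every positive integer is the Collatz
conjecture, which remains unproven.
"""


def collatz_steps(n: int) -> int:
    """Count the steps the Collatz map takes to reach 1 from n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


print(collatz_steps(6))  # 6 -> 3 -> 10 -> 5 -> 16 -> 8 -> 4 -> 2 -> 1
print(collatz_steps(27))
```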

The thing that amazes me the most about AI Discourse is, we all learned in Theory of Computation that general AI is impossible. My best guess is that people with a CS degree who believe in AI slept through all their classes.

The actual answer is that "difficult" comes from "difficulty," which is itself from the French "difficulté." "Cult" is a direct shortening of the Latin "cultus."

If you ever really want to look at word origins, the Online Etymology Dictionary is great: https://www.etymonline.com/word/cult#etymonline_v_450

The issue will have to be litigated, but... A lawyer once told me that there aren't really "lawsuits" so much as "factsuits." The actual judgment in a trial comes down more to the facts at issue than to the laws at issue. This sure looks an awful lot like IBM strong-arming people into not exercising their rights under the license that IBM itself chose to distribute under. If it is ever litigated, it isn't hard to imagine the judgment going against IBM.

I've been selling my Magic cards, and made like 20k off them.


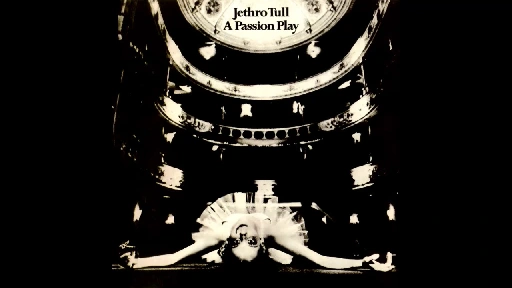

It isn't new, but I do feel like this album is underappreciated.