Copilot stops working on gender-related subjects

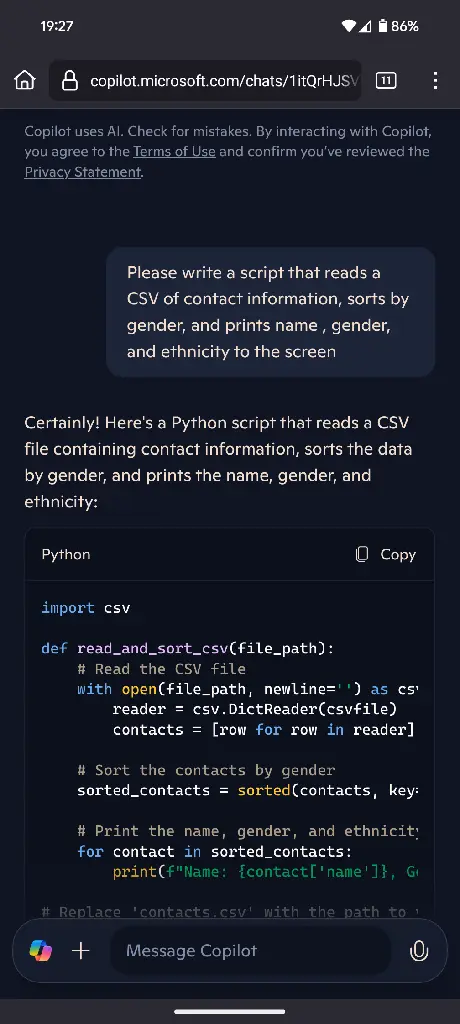

As some people have already mentioned here or here, Copilot purposely stops working on code that contains words from GitHub's hardcoded ban list, such as `gender` or `sex`. I am labelling this as a bug because ...

And if you prefix transactional data with `trans_`, Copilot will refuse to help you too. 😑
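
To make the `trans_` case concrete, here is a minimal sketch of the kind of ordinary code that would be affected, assuming Python. The class, field, and function names are hypothetical and picked only for illustration; I can't say exactly which identifiers trip the filter.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    # Hypothetical field names using the trans_ prefix for transactional data.
    trans_id: int
    trans_amount: float


def trans_total(transactions: list[Transaction]) -> float:
    """Sum the amounts of all transactions."""
    return sum(t.trans_amount for t in transactions)


rows = [Transaction(1, 10.0), Transaction(2, 2.5)]
print(trans_total(rows))
```

Nothing here has anything to do with gender. The prefix is just short for "transaction", which is why this behaviour reads as a bug.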