I should have clarified that I was referring to “Restart” rather than “Shut Down” because I’m not aware of how frequently people actually “Shut Down” their devices. My intention was to ask: How often would you need to physically press the power button when the functionality of turning the device on and off is accessible through software?

On another note, I think the amount of attention posts like this get is a pretty clear indication of how deep Apple hate truly runs. I'm fine with Apple (I'm more of a Linux person myself), but stuff like this makes me shrug my shoulders. Only Apple could garner this much attention for putting the power button in a weird spot on a tiny desktop that nobody complaining about it would buy even if the button were on top of the device.

Unless your computer has issues, can’t you just power off from within macOS?

JPEG XL support in Waterfox is nice.

The fact that iPhones are getting this before Android phones without Google Play Services tells you all you need to know about the nature of RCS. Android has lost all of its intrigue and fun in favor of becoming GoogleOS.

Hey Blue - this isn't release 2.2.0-A. This is just plain PSY 2.2.0 - 2.2.0-A will come later ;)

You need VideoToolbox for this particular tool because it calls the VideoToolbox library from within FFmpeg in order to encode the video.

"Why do I need x264 to encode H.264 in FFmpeg?" is essentially what you're asking. FFmpeg needs VideoToolbox support to work with my tool.

If you're asking why I chose to use VideoToolbox in the first place, it was because I want this to be a macOS-specific tool with very fast encoding speeds at decent fidelity per bit. Hardware accelerated video encoding is one way to make this happen.

vt-enc calls FFmpeg, which calls the VideoToolbox encoding framework. Without VideoToolbox support in your FFmpeg build, vt-enc's ffmpeg commands will fail.

VideoToolbox is the encoder that FFmpeg links to.
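If you're not sure whether your FFmpeg build has VideoToolbox support, one way to check is to look for encoders with a `_videotoolbox` suffix (such as `h264_videotoolbox` or `hevc_videotoolbox`) in FFmpeg's encoder list. Here's a minimal Bash sketch of that check. The function name `has_videotoolbox` is hypothetical and isn't part of vt-enc:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of vt-enc): reads an `ffmpeg -encoders`
# listing on stdin and succeeds if any VideoToolbox encoder is listed.
has_videotoolbox() {
  grep -q '_videotoolbox'
}
```

Usage: `ffmpeg -hide_banner -encoders | has_videotoolbox || echo "No VideoToolbox encoders found" >&2`. Reading the listing from stdin keeps the check independent of how FFmpeg itself is invoked.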

Filesystem compression is dope.

Thanks for the helpful advice! Shellcheck is the best :)

Edit: How do I get the ANSI escape colors to appear with the cat << EOF syntax?
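For anyone with the same question: an unquoted heredoc (`<< EOF`) expands variables and command substitutions, but it does not interpret backslash escapes like `\033`, so `cat` prints them literally. A common workaround is to store real escape characters in variables using Bash's ANSI-C quoting (`$'...'`) and reference those variables inside the heredoc. The variable names and help text below are made up for illustration:

```bash
#!/usr/bin/env bash
# ANSI-C quoting ($'...') turns \033 into a real ESC character at assignment
# time; inside a heredoc, "\033" would stay literal text.
BOLD=$'\033[1m'
GREEN=$'\033[32m'
RESET=$'\033[0m'

# Hypothetical help text; the option shown is illustrative only.
show_help() {
  # The delimiter must stay unquoted (EOF, not 'EOF') so ${...} expands.
  cat << EOF
${BOLD}Usage:${RESET} script.sh [options]
  ${GREEN}-h${RESET}  Show this help
EOF
}
```

Keep the delimiter unquoted: `<< 'EOF'` disables all expansion, so the `${BOLD}`-style references would print as-is. If the output might be redirected to a file, you may want to set these variables to empty strings when `[[ -t 1 ]]` is false.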

FFmpeg VideoToolbox frontend in Bash. Contribute to gianni-rosato/vt-enc development by creating an account on GitHub.

cross-posted from: https://lemmy.ml/post/19003650 >> vt-enc is a bash script that simplifies the process of encoding videos with FFmpeg using Apple's VideoToolbox framework on macOS. It provides an easy-to-use command-line interface for encoding videos with various options, including codec selection, quality settings, and scaling.
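Roughly speaking, a frontend like this assembles arguments for one of FFmpeg's VideoToolbox encoders. The Bash sketch below is a hypothetical illustration of that idea, not vt-enc's actual code: the function name and argument order are invented, only `h264_videotoolbox` and `hevc_videotoolbox` are handled, and it assumes FFmpeg's `-q:v` constant-quality mode for VideoToolbox (1 to 100, higher is better), which FFmpeg only accepts for these encoders on Apple Silicon. It prints the command as a dry run instead of executing it:

```bash
#!/usr/bin/env bash
# Hypothetical sketch, NOT vt-enc's implementation.
#   $1 input, $2 output, $3 codec (h264 | hevc),
#   $4 quality for -q:v, $5 optional output height
vt_ffmpeg_cmd() {
  local input=$1 output=$2 codec=$3 quality=$4 height=${5:-}
  case $codec in
    h264|hevc) ;;
    *) echo "unsupported codec: $codec" >&2; return 1 ;;
  esac
  # Map the codec name onto FFmpeg's VideoToolbox encoder name.
  local -a cmd=(ffmpeg -i "$input" -c:v "${codec}_videotoolbox" -q:v "$quality")
  if [[ -n $height ]]; then
    # scale=-2:H keeps the aspect ratio and rounds the width to an even number.
    cmd+=(-vf "scale=-2:${height}")
  fi
  cmd+=("$output")
  echo "${cmd[*]}"
}
```

To actually encode, a script would run the array directly (`"${cmd[@]}"`) rather than echoing it, which also keeps paths containing spaces intact.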

Royalty-free blanket patent licensing is compatible with Free Software and should be considered the same as being unpatented. Even if it's conditioned on a grant of reciprocality. It's only when patent holders start demanding money (or worse, withholding licenses altogether) that it becomes a problem

I'm pretty much all BTRFS at this point

JPEG-XL is in no way patent encumbered. Neither is AVIF. I don't know what you're talking about

No, there aren't any licensing issues with JPEG-XL.

YouTube serves VP9 video (and more recently a lot of AV1) and I think the Pis only have hardware accelerated decoding of H.264/5 as it stands today

Minecraft is arguably & measurably more performant on Linux, full stop. Anything using OpenGL performs better on Linux, check any Minecraft benchmark online.

Also, a lot of towns/cities remove those romantic locks regularly anyway.

Introducing SVT-AV1-PSY v2.1.0-A

Features

- New parameter `--max-32-tx-size`, which restricts block transform sizes to a maximum of 32x32 pixels. This can be useful in very specific scenarios for improving overall efficiency

- Added support for HDR10+ JSON files via a new `--hdr10plus-json` parameter (thanks @quietvoid!). In order to build a binary with support for HDR10+, see our PSY Development page.

- New parameter `--adaptive-film-grain`, which helps remedy perceptually harmful grain patterns caused by extracting grain from blocks that are too large for a given video resolution. This parameter is enabled by default
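As an illustration of how the new options might combine on the command line, here's a hypothetical Bash helper that assembles an `SvtAv1EncApp` invocation. The 0/1 switch values for `--max-32-tx-size` and `--adaptive-film-grain` and the file-path argument for `--hdr10plus-json` are assumptions based on the descriptions above; check `SvtAv1EncApp --help` on your build. Since `--adaptive-film-grain` adjusts grain extraction, it presumably only matters when film grain synthesis (`--film-grain`) is enabled:

```bash
#!/usr/bin/env bash
# Hypothetical sketch; option value forms are assumptions, not documented syntax.
#   $1 input, $2 output, $3 CRF, $4 film grain strength, $5 optional HDR10+ JSON
svt_psy_cmd() {
  local input=$1 output=$2 crf=$3 grain=$4 hdr10plus_json=${5:-}
  # Film grain synthesis plus the adaptive-grain switch
  # (already on by default; passed explicitly here for clarity).
  local -a cmd=(SvtAv1EncApp -i "$input" -b "$output" --crf "$crf"
                --film-grain "$grain" --adaptive-film-grain 1)
  # Cap block transforms at 32x32; only worthwhile in specific scenarios.
  cmd+=(--max-32-tx-size 1)
  # Requires a binary built with HDR10+ support (see the PSY Development page).
  if [[ -n $hdr10plus_json ]]; then
    cmd+=(--hdr10plus-json "$hdr10plus_json")
  fi
  echo "${cmd[*]}"
}
```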

Quality & Performance

- Disabled SSIM-cost transform decisions while keeping SSIM-cost mode decisions, generally improving Tune 3's efficiency & consistency

- Additional NEON optimizations for ARM platforms, providing a speed increase

Documentation

- All of the features present in this release have been documented, so associated documentation has been updated accordingly

- Build documentation updated to reflect the HDR10+ build option

Bug Fixes

- Disabled quantization matrices for presets 5 and higher due to a visual consistency bug (#56)

Full Changelog: https://github.com/gianni-rosato/svt-av1-psy/commits/v2.1.0-A

cross-posted from: https://lemmy.ml/post/15988326 >> Windows 10 will reach end of support on October 14, 2025. The current version, 22H2, will be the final version of Windows 10, and all editions will remain in support with monthly security update releases through that date. Existing LTSC releases will continue to receive updates beyond that date based on their specific lifecycles. > > Source: https://learn.microsoft.com/en-us/lifecycle/products/windows-10-home-and-pro

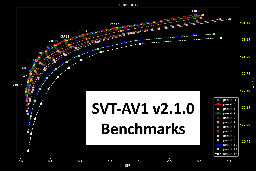

SVT-AV1 2.1.0 just released, how does it compare to the previous version?

cross-posted from: https://lemmy.ml/post/15953505 >SVT-AV1 2.1.0 just released, how does it compare to SVT-AV1 2.0.0? Well-known encoder Trix attempts to answer this question with metrics, graphs, and detailed analysis.

Hi there! We're back with a new micro-release format to announce some exciting changes in SVT-AV1-PSY v2.0.0-A! 🎉

PSY Updates

Features

- The CRF range, previously capped at 63, has been extended to a maximum value of 70. It can also be set in quarter steps of 0.25

- New option: `--enable-dlf 2` for a slower, more accurate deblocking loop filter

- New option: `--qp-scale-compress-strength` (0 to 3), which sets a strength for the QP scale algorithm to compress values across all temporal layers. Higher values result in more consistent video quality

- New option: `--frame-luma-bias` (0 to 100), which enables experimental frame-level luma bias to improve quality in dark scenes by adjusting frame-level QP based on average luminance across each frame
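The extended CRF rule is easy to check mechanically: a value is accepted if it's no higher than 70 and a multiple of 0.25, which is the same as saying four times the value is a whole number. A minimal Bash sketch (Bash has no floating-point arithmetic, so `awk` does the math); the function name is hypothetical, and since the notes only state the maximum, the sketch only rejects negative values through its input pattern:

```bash
#!/usr/bin/env bash
# Hypothetical validator for the CRF rule described above.
valid_crf() {
  # Plain non-negative decimals only, e.g. 27, 27.5, 27.25
  [[ $1 =~ ^[0-9]+(\.[0-9]+)?$ ]] || return 1
  # awk checks the maximum and the 0.25 step:
  # a multiple of 0.25 times 4 is a whole number.
  awk -v c="$1" 'BEGIN { exit !(c <= 70 && c * 4 == int(c * 4)) }'
}
```

For example, `valid_crf 27.25` succeeds, while `valid_crf 27.3` and `valid_crf 70.25` fail.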

Quality & Performance

- A temporal layer qindex offset has been added to Tune 3 for more consistent quality across frames when encoding

- Minor speed bump to Preset 8

- Dynamic delta_q_res switching implemented to help reduce signaling overhead, which should improve quality especially at CRF ≥40

- Other general improvements to Tune 3

Documentation

- PNG images have been replaced with smaller lossless WebP images, resulting in faster loading & repository cloning times.

- More consistent & thorough PSY Development page, including build instructions

Bug Fixes

- Help menu formatting adjusted for less frequent underlining

- `--progress 2` no longer reports the same information as `--progress 3`

Other

- Introducing PSY Micro-releases! Each micro-release will be marked with a letter, bringing a bundle of new features & improvements. The release letter will reset back to the initial `A` each time our mainline version is updated. More info can be found in this project's README & the PSY Development page

Thanks for using SVT-AV1-PSY! ♥️

Full Changelog: https://github.com/gianni-rosato/svt-av1-psy/commits/v2.0.0-A

From the GitHub releases:

> Hello, everyone! We've been hard at work enhancing SVT-AV1 with our additions to the encoder improving visual fidelity. Little by little, we are working on trying to bring many of them to mainline! For the time being, I want to note that major SVT-AV1-PSY releases & mainline releases are not the same, and the codebases differ due to our changes; the version numbers may be identical, but the versions themselves are not, which is disclosed within the encoder's version information. With that, we're excited to announce SVT-AV1-PSY v2.0.0! 🎉

PSY Updates

Variance boost

- Moved varboost delta-q adjusting code to happen before TPL, giving TPL the opportunity to work with more accurate superblock delta-q priors, and produce better final rdmult lambda values

- Fixed rare cases of pulsing at high CRFs (>=40) and strengths (3-4)

- 2% avg. bitrate reduction for comparable image quality

- Added an alternative boosting curve (`--enable-alt-curve`), with different variance/strength tradeoffs

- Refactored boost code so it internally works with native q-step ratios

- Removed legacy variance boosting method based on 64x64 values

- Parameter `--new-variance-octile` renamed to `--variance-octile`

- Parameter

Excitingly, a var-boost mainline merge has been marked with the highest priority issue label by the mainline development team, so we may see this in mainline SVT-AV1 soon! Congrats @juliobbv! 🎉

Other

- Presets got faster, so in addition to Preset -2, we have an even slower Preset -3

- `--sharpness` now accepts negative values

- The SVT-AV1-PSY encoder now supports Dolby Vision encoding via Dolby Vision RPUs. To build with Dolby Vision support, install libdovi & pass `--enable-libdovi` to `./build.sh` on macOS/Linux (or `enable-libdovi` to `./build.bat` on Windows).

Mainline Updates

Major API updates

- Changed the API signaling the End Of Stream (EOS) with the last frame vs with an empty frame

- `OPT_LD_LATENCY2`, making the change above, is kept in the code to help devs with integration

- The support of this API change has been merged to ffmpeg with a 2.0 version check

- Removed the 3-pass VBR mode which changed the calling mechanism of multi-pass VBR

- Moved to a new versioning scheme where the project major version will be updated every time API/ABI is changed
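Under this scheme, a version string alone tells an integrator whether an API/ABI break may have happened: if the major numbers differ, assume it has. A tiny Bash sketch of that check; the helper is hypothetical and not part of SVT-AV1, and it only compares the part before the first dot:

```bash
#!/usr/bin/env bash
# Hypothetical helper: two versions are assumed API/ABI compatible
# only if their major numbers match.
same_api_major() {
  # ${v%%.*} strips everything from the first dot, leaving the major number.
  [[ ${1%%.*} == "${2%%.*}" ]]
}
```

For example, `same_api_major 2.0.0 2.1.0` succeeds, while `same_api_major 1.8.0 2.0.0` fails.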

Encoder

- Improve the tradeoffs for the random access mode across presets:

- Speedup presets MR by ~100% and improved quality along with tradeoff improvements across the higher quality presets (!2179,#2158)

- Improved the compression efficiency of presets M9-M13 by 1-4% (!2179)

- Simplified VBR multi-pass to use 2 passes to allow integration with ffmpeg

- Continued adding ARM optimizations for functions with `c_only` equivalent

- Replaced the 3-pass VBR with a 2-pass VBR to ease the multi-pass integration with ffmpeg

- Memory savings of 20-35% for LP 8 mode in preset M6 and below and 1-5% in other modes / presets

- Film grain table support via `--fgs-table` (already in SVT-AV1-PSY)

- Disable film grain denoise by default (already in SVT-AV1-PSY)

Cleanup, bug fixes & documentation

- Various cleanups and functional bug fixes

- Update the documentation to reflect the rate control

Thanks for using SVT-AV1-PSY! ♥️

Full Changelog: https://github.com/gianni-rosato/svt-av1-psy/commits/v2.0.0

An in-depth look at implementing a QOI encoder in the Zig programming language, exploring the QOI image format and Zig's features.

cross-posted from: https://lemmy.ml/post/13183095

After what happened with yuzu emu, I'm done...

EDIT: This post is a joke! It was posted in /c/memes, of course it is going to be a meme! If you consider this news, please re-evaluate your choice of sources.

At the same time, I think it says something about Nintendo that some actually believed this...

Android currently ships with the libgav1 AV1 decoder, but a future update will switch that to libdav1d, which offers much better performance.

It's official that Android will be rolling out dav1d to replace libgav1 as a system-wide codec on Android 14 devices, though there is potential for it to be supported as far back as Android 10. Finally, libgav1 is no more!

As someone who spends time programming, I of course find myself in conversations with people who aren't as familiar with it. It doesn't happen all the time, but these discussions can lead to people coming up with some pretty wild misconceptions about what programming is and what programmers do.

I'm sure many of you have had similar experiences. So, I thought it would be interesting to ask.

See title. For those who don’t know, the Mandela Effect is a phenomenon where a large group of people remember something differently than how it occurred. It’s named after Nelson Mandela because a significant number of people remembered him dying in prison in the 1980s, even though he actually passed away in 2013.

I’m curious to hear about your personal experiences with this phenomenon. Have you ever remembered an event, fact, or detail that turned out to be different from reality? What was it and how did you react when you found out your memory didn’t align with the facts? Does it happen often?

The Scalable Video Technology for AV1 (SVT-AV1 Encoder and Decoder) with perceptual enhancements for psychovisually optimal AV1 encoding - gianni-rosato/svt-av1-psy

Some big changes have been introduced in SVT-AV1-PSY, courtesy of Clybius, the author of aom-av1-lavish! Here is the changelog:

Feature Additions

- Tune 3: A new tune based on Tune 2 (SSIM) called SSIM with Subjective Quality Tuning. Generally harms metric performance in exchange for better visual fidelity.

- `--sharpness`: A parameter for modifying loopfilter deblock sharpness and rate distortion to improve visual fidelity. The default is 0 (no sharpness).

Modified Defaults

- Default 10-bit color depth. Might still produce 8-bit video when given an 8-bit input.

- Disable film grain denoising by default, as it often harms visual fidelity.

- Default to Tune 2 instead of Tune 1, as it reliably outperforms Tune 1 on most metrics.

- Enable quantization matrices by default.

- Set minimum QM level to 0 by default.

That's all, folks! Keep an eye on the master branch for more changes in the future!

From the landing page:

> Tart is a virtualization toolset to build, run and manage macOS and Linux virtual machines on Apple Silicon.

> Tart is using Apple’s native Virtualization.Framework that was developed along with architecting the first M1 chip. This seamless integration between hardware and software ensures smooth performance without any drawbacks.

> Tart powers several continuous integration systems including on‑demand GitHub Actions Runners and Cirrus CI. Double the performance of your macOS actions with a couple lines of code.

> With more than 36,000 installations to date, Tart has been adopted for various scenarios. Its applications range from powering CI/CD pipelines and reproducible local development environments, to helping in the testing of device management systems without actual physical devices.

I’ve been distrohopping for a while now, and eventually I landed on Arch. Part of the reason I have stuck with it is I think I had a balanced introduction, since I was exposed to both praise and criticism. We often discuss our favorite distros, but I think it’s equally important to talk about the ones that didn’t quite hit the mark for us because it can be very helpful.

So, I’d like to ask: What is your least favorite Linux distribution and why? Please remember, this is not about bashing or belittling any specific distribution. The aim is to have a constructive discussion where we can learn about each other’s experiences.

My personal least favorite is probably Manjaro.

Consider:

- What specific features/lack thereof made it less appealing?

- Did you face any specific challenges?

- How was your experience with the community?

- If given a chance, what improvements would you suggest?